
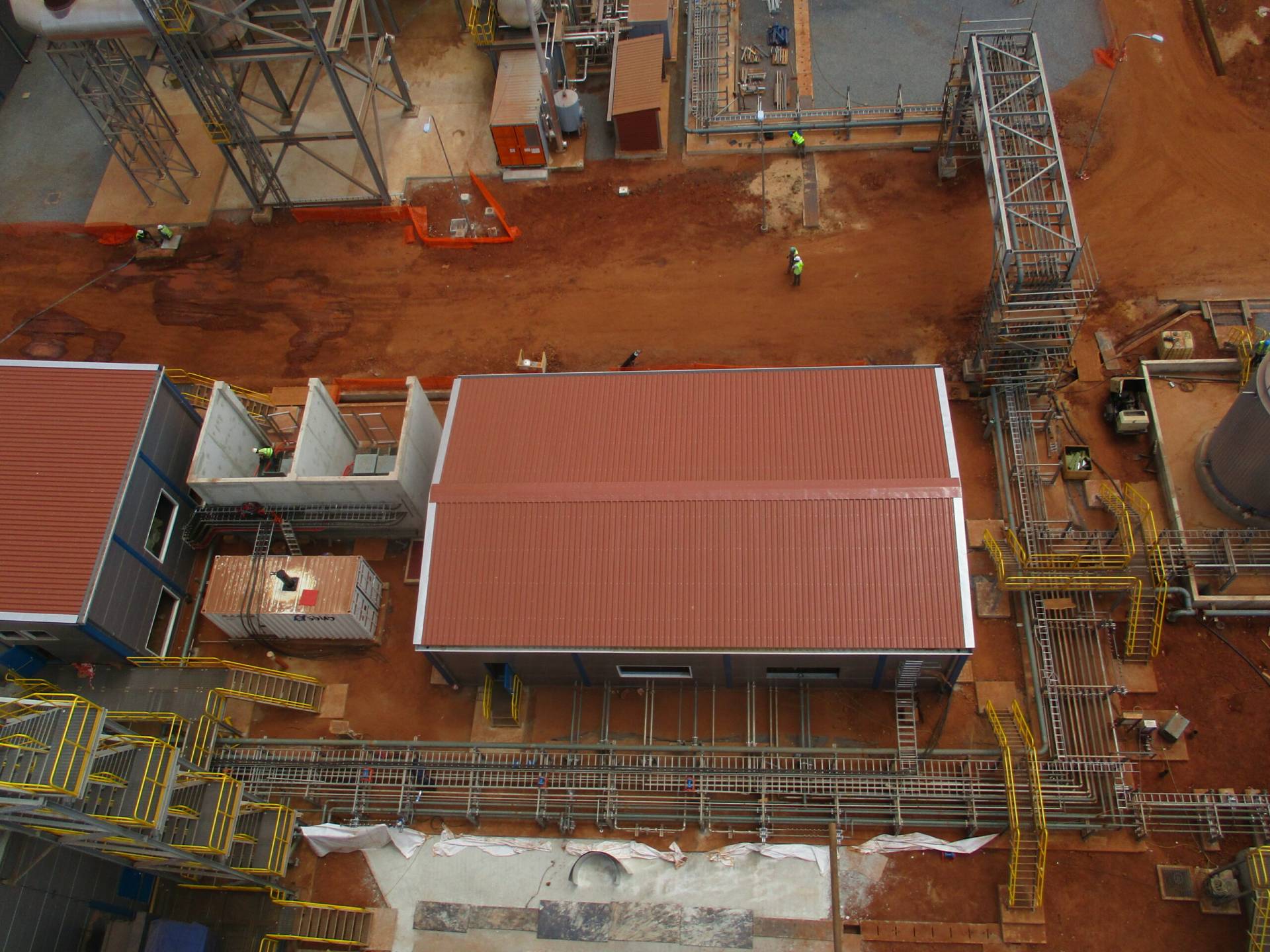

TTI is first and foremost a team that has, for many years, carried out projects in Senegal and abroad (Mali, Morocco, Egypt, Togo, Cape Verde, Burkina Faso, Mauritania, Guinea, etc.).

We have taken part in all the major projects of recent years in Senegal, including ICS2, SAR, Cimenterie du SAHEL, SENELEC, GRANDE COTE, WARTSILA and MAN Diesel, as shown in our references below.

Our Business Lines

Our activities focus mainly on:

- Supply, fabrication and installation of industrial boilermaking: vats, tanks and storage reservoirs

- Supply, fabrication and installation of industrial piping

- Supply, fabrication and installation of steel structures

- Industrial maintenance, troubleshooting and assembly: mechanical installation, industrial relocations, plant maintenance

- Mechanical erection of industrial plants

Our Partners

In addition, we act as general contractor on large-scale projects, working with the following partners:


Contact Us

- Download our brochure
- +221 33 832 98 37
- support@tti.sn
- Lot N° E, Rocade Fann Bel-Air, B.P. 34 184, DAKAR